EmotiTactor

Emotional Expression of Robotic Physical Contact

About

Research Questions

Physical touch is one of the most important channels of interpersonal communication, but we barely notice the haptic cues in our daily lives. The study of robot-human affective interaction has primarily focused on facial expressions and vocal interactions, but not touch.

What if the robots being developed today and in the future had the ability to express their emotions to humans through physical contact?

Could it be possible for humans to understand robots’ emotions through haptic interactions?

EmotiTactor

EmotiTactor is a research experiment that aims to improve the emotional expressiveness of the robot. A tangible interface is constructed to perform haptic stimulations for primary emotions through a series of machine tactors (tactile organs).

Research

Human-Human Touch

Hertenstein et al.’s research [1] indicated that humans can decode distinct emotions communicated via touch. They conducted a study that divided the participants into dyads and randomly assigned each member the role of encoder or decoder. The encoders conveyed the emotion assigned to them by touching the decoders’ forearms, without any visual or vocal communication. The decoders then chose, on a “response sheet,” the emotion they felt through the cutaneous stimuli. The results showed that anger, fear, disgust, love, gratitude, and sympathy could be decoded at above-chance levels. The researchers also recorded the most commonly used tactile behaviors for each emotion. These findings inspired me to explore how the primary emotions of human-human touch could be used in the context of HCI to create human-robot touch.

Design

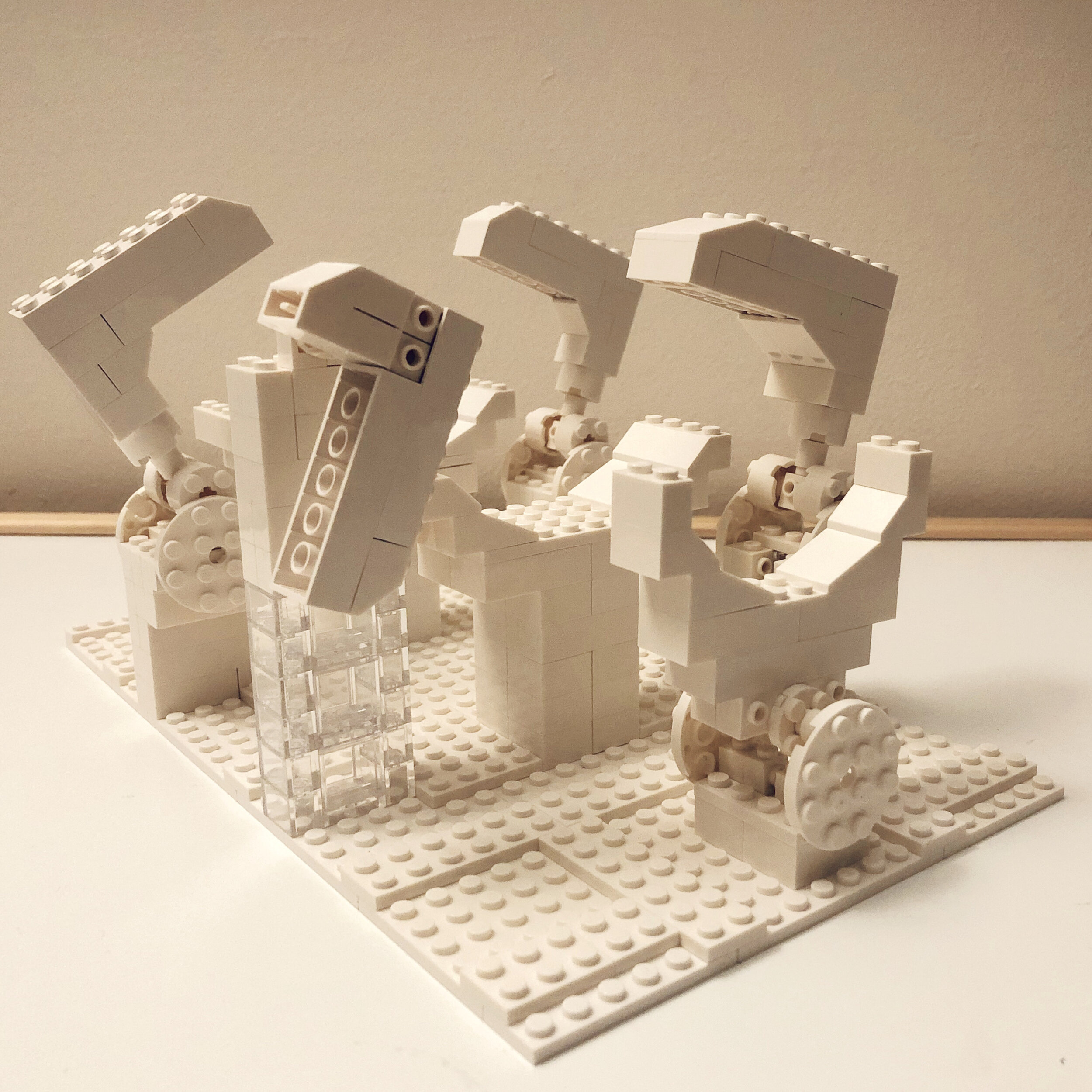

First interface prototype

To study the emotional expression of robotic contact, I replaced the human encoder with a robotic tactor (a tactile organ). I hypothesized that humans could decode emotions from robotic tactile stimulation, as they do from human-human touch.

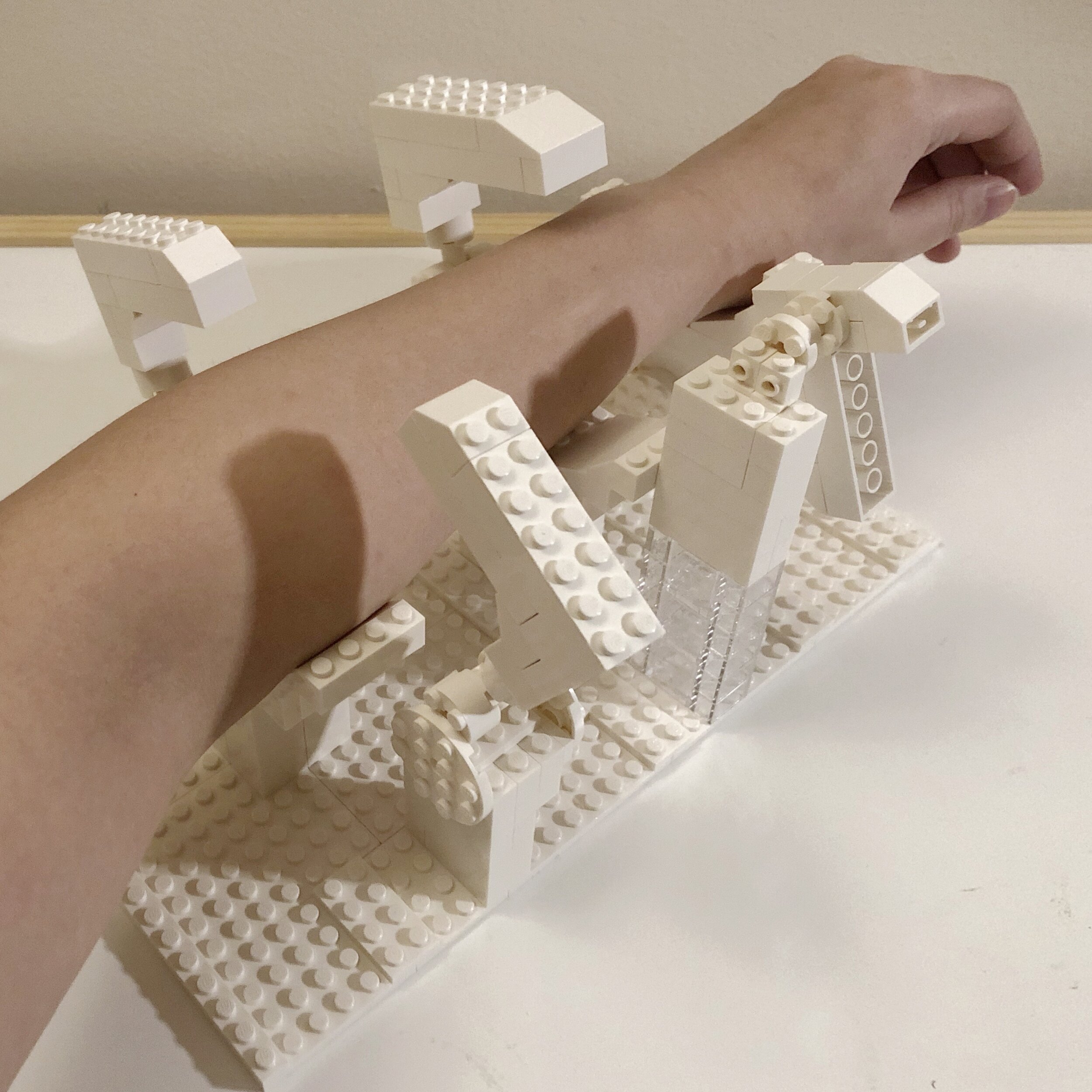

Prototyping

The prototype is a structure that fits the length of the forearm (18 inches). At the bottom of the structure, there is a groove in the shape of a hand. Inside the structure, there are four machine tactors driven by servo motors. Each tactor reproduces one or two of the most “frequent types of touch” through the motion of its servo motor. The tactile behaviors recorded in Hertenstein et al.’s study include squeezing, trembling, patting, hitting, shaking, and stroking.

Design of Tactors

Fear

The tactor for fear was designed in the shape of two bending fingers driven by two SD 90 servo motors. It can squeeze the participant’s forearm and can also tremble and stroke. A randomness parameter was added to the control algorithm; the slightly random motion of the tactor gives people a tactile sensation of trembling.
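The role of the randomness parameter can be illustrated with a short sketch. This is a Python stand-in, not the project's control code: the helper name `trembling_angles`, the assumed 0 to 180 degree servo range, and every numeric value are hypothetical.

```python
import random


def trembling_angles(base_angle, jitter_deg, steps, seed=None):
    """Return servo target angles that wobble randomly around base_angle.

    Hypothetical helper: jitter_deg plays the role of the randomness
    parameter, and angles are clamped to an assumed 0-180 degree range.
    """
    rng = random.Random(seed)
    angles = []
    for _ in range(steps):
        # Each step gets an independent random offset within +/- jitter_deg.
        offset = rng.uniform(-jitter_deg, jitter_deg)
        angles.append(max(0.0, min(180.0, base_angle + offset)))
    return angles


if __name__ == "__main__":
    # Squeeze position of 60 degrees with up to 5 degrees of jitter (made-up values).
    print(trembling_angles(60, 5, steps=8, seed=1))
```

With `jitter_deg` set to 0 the tactor would simply hold the squeeze position; a small non-zero value is what would turn the same squeeze into a tremble.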

Sadness

Sadness used the same tactor as fear, applying the gestures of stroking and squeezing. For stroking, I lowered the frequency and reduced the range of the servo’s motion.

Disgust

The tactor for disgust was driven by a SO5NF STD servo motor, which had higher torque. It can push the hand from the side. Since both this emotion and its tactile behavior are strong, I programmed it with a large range of motion.

Anger

The tactor for anger was in the shape of a palm, which had a larger contact area than the other tactors. Driven by an SD 90 servo motor, it performs a hitting gesture. I programmed the function with high frequency, large range, and randomness.

Sympathy

Sympathy used the same tactor as anger, this time performing a patting gesture. The motion of the motor was programmed to be gentle and regular.
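Because anger and sympathy share one tactor, the difference between hitting and patting lies only in the motion parameters. A minimal sketch of that idea follows, assuming a simple back-and-forth sweep. The `MotionProfile` class, the `servo_angle` function, and all numbers are hypothetical; the source describes the settings only qualitatively.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionProfile:
    """Hypothetical parameter set for one tactile behavior."""
    frequency_hz: float  # strokes per second
    range_deg: float     # sweep of the servo arm
    jitter_deg: float    # 0 means perfectly regular motion


# Illustrative values only, chosen to match the qualitative description.
HITTING = MotionProfile(frequency_hz=4.0, range_deg=60.0, jitter_deg=10.0)  # anger
PATTING = MotionProfile(frequency_hz=1.0, range_deg=20.0, jitter_deg=0.0)   # sympathy


def servo_angle(profile, t, rng=random):
    """Angle in degrees at time t (seconds): a smooth sweep plus optional jitter."""
    phase = 2 * math.pi * profile.frequency_hz * t
    sweep = 0.5 * profile.range_deg * (1 - math.cos(phase))  # 0 .. range_deg
    jitter = rng.uniform(-profile.jitter_deg, profile.jitter_deg)
    return max(0.0, sweep + jitter)


if __name__ == "__main__":
    for name, profile in (("hitting", HITTING), ("patting", PATTING)):
        samples = [round(servo_angle(profile, i / 20), 1) for i in range(10)]
        print(name, samples)
```

Keeping the parameters in one small record per behavior would let the same tactor switch between emotions without any mechanical change.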

Happiness

The tactor for happiness was designed in the shape of a grasping hand, controlled by an SD 90 servo installed at the top. It performed swinging and shaking gestures.

STUDY

Next, I ran two rounds of studies with 10 participants each (study 1: 2 males, 8 females, average age 24.6; study 2: 4 males, 5 females, 1 other, average age 24.1) to test the haptic simulations for primary emotions. Each participant was given the background and briefed on the protocols below before participating.

PROTOCOLS

On arrival, the participant sat at a table.

Participants wore noise-reducing earphones.

Participants put their forearm into the machine through a hole at the bottom of an opaque foam board that separated them from the EmotiTactor.

Calibration was carried out before the test to ensure that the tactors actually touched the participant’s arm.

A priming process, called the overview, was added in the second-round study: the machine cycled through all of the haptic functions, and participants did not need to respond during this routine.

The machine then cycled through the functions in a random order of the six emotions, generated with p5.js.

After each function, participants were asked to make a choice on a response sheet.
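The randomized presentation order in the protocol can be sketched as below. The study generated the order with p5.js; this Python version is only an equivalent illustration, and the function name `trial_order` and its `seed` parameter are assumptions added for reproducibility.

```python
import random

EMOTIONS = ["fear", "sadness", "disgust", "anger", "sympathy", "happiness"]


def trial_order(seed=None):
    """Return the six emotions in a random order, each appearing exactly once."""
    rng = random.Random(seed)
    # sample() with k equal to the list length yields a shuffled copy.
    return rng.sample(EMOTIONS, k=len(EMOTIONS))


if __name__ == "__main__":
    print(trial_order())
```

Drawing a fresh order per participant keeps the position of an emotion in the sequence from becoming a cue.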

Percentage of decoding accuracy for target emotion

Result

The results in the table indicate that humans can decode at least five emotions through robotic tactile behaviors (fear, disgust, happiness, anger, and sympathy). The decoding accuracy rates in study 2 ranged from 40% to 100%, all above the chance level (25% [3]). Participants easily confused sadness with sympathy, which is consistent with the previous study [1]. During the participants’ interviews, we also observed that individuals found it hard to tell these two emotions apart at the subjective level.
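For readers who want to reproduce this kind of tally, here is a minimal sketch of how per-emotion decoding accuracy could be computed from response sheets and compared with the 25% chance level. The function names and the toy responses are hypothetical and are not the study's data; the comparison is a plain threshold, not a significance test.

```python
from collections import Counter

CHANCE_LEVEL = 0.25  # chance level cited in the text [3]


def decoding_accuracy(trials):
    """Map each target emotion to the fraction of trials decoded correctly.

    trials is an iterable of (target, response) pairs, one per sheet entry.
    """
    shown = Counter()
    correct = Counter()
    for target, response in trials:
        shown[target] += 1
        if response == target:
            correct[target] += 1
    return {emotion: correct[emotion] / shown[emotion] for emotion in shown}


def above_chance(accuracy, chance=CHANCE_LEVEL):
    """Return, sorted by name, the emotions whose accuracy exceeds chance."""
    return sorted(e for e, acc in accuracy.items() if acc > chance)


if __name__ == "__main__":
    # Made-up responses for illustration only; NOT the study's data.
    toy_trials = [
        ("anger", "anger"), ("anger", "anger"),
        ("sadness", "sympathy"), ("sadness", "sadness"),
        ("sadness", "sympathy"), ("sadness", "sympathy"),
    ]
    acc = decoding_accuracy(toy_trials)
    print(acc, above_chance(acc))
```

Keeping the raw (target, response) pairs rather than only the accuracy also makes confusions such as sadness being read as sympathy easy to count.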

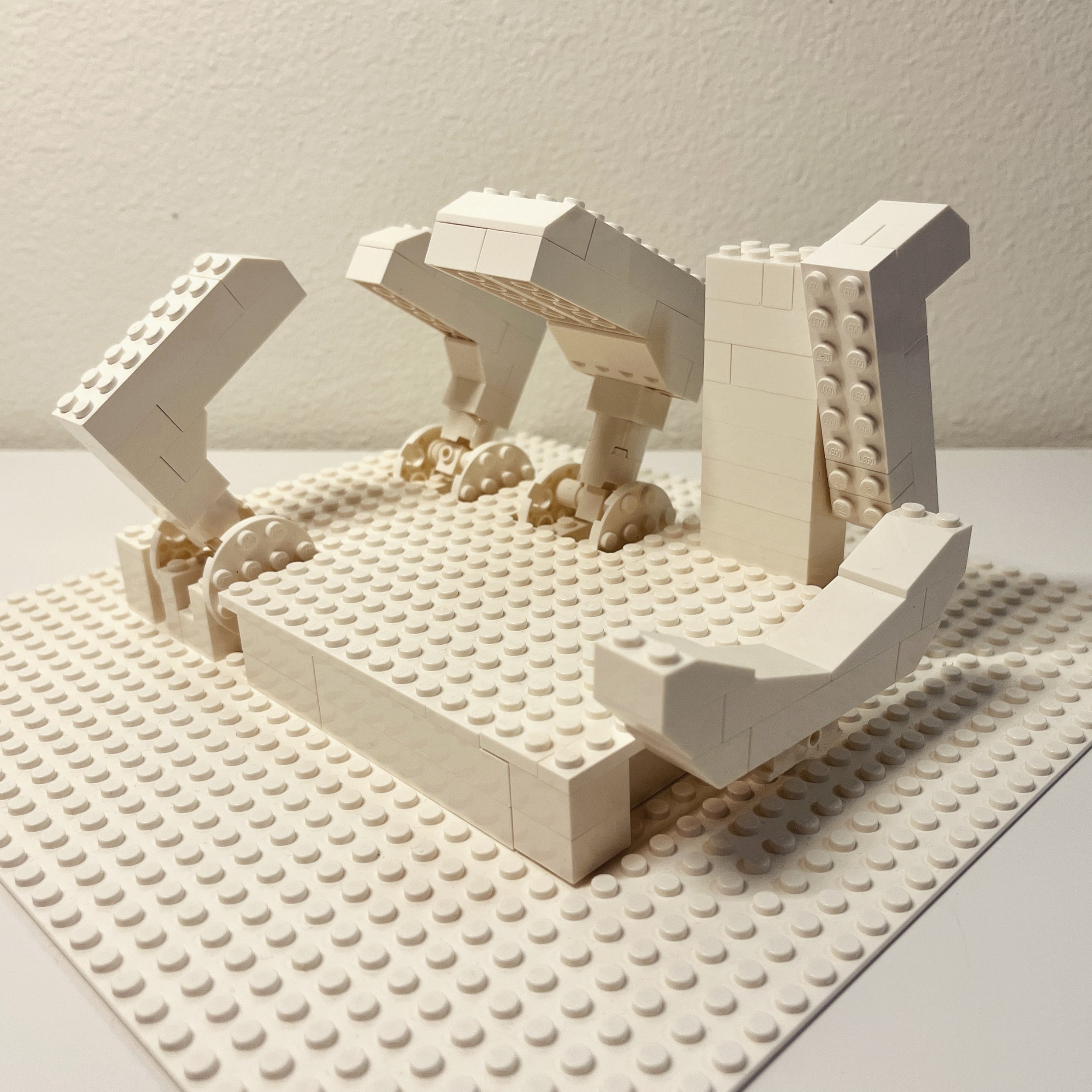

Iteration

Thesis Show

MFA Design and Technology, class of 2020

Parsons School of Design

Advisors: Harpreet Sareen, Loretta Wolozin, John Sharp, Barbara Morris

May 2020

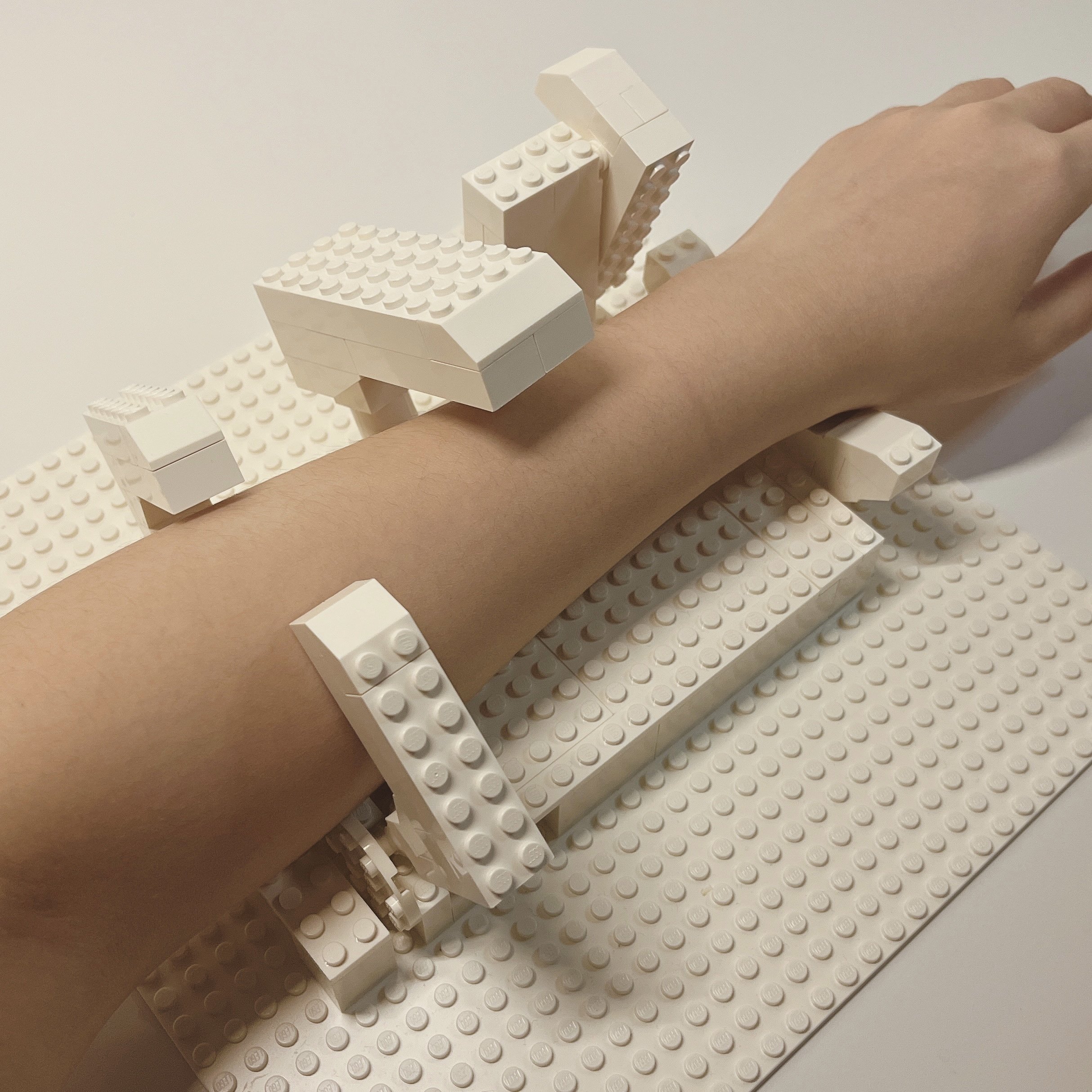

VR Application

We propose a forearm-mounted robot that performs complementary touches in relation to the behaviors of a companion agent in virtual reality (VR). The robot consists of a series of tactors driven by servo motors that render specific tactile patterns to communicate primary emotions (fear, happiness, disgust, anger, and sympathy) and other notification cues. We showcase this through a VR game with physical-virtual agent interactions that facilitate the player-companion relationship and increase user immersion in specific scenarios. The player collaborates with the agent to complete a mission while receiving affective haptic cues with the potential to enhance sociality in the virtual world.

Future Applications

Expressive theatre seat

EmotiTactor can be applied to the design of a theatre seat. The armrest touches you while you watch a movie, and its emotion changes with the story of the film.

Timid watch belt

It is a belt for a smartwatch that shows fear when the smartwatch is running out of power.

Strict lid

It can be fitted to a bottle or jar. It may punish you with anger when you are impatient enough to drink water that is still too hot, or when you take too many candies.

Touch-you cushion

It is an interactive toy that keeps you company. It may pat you and convey sympathy to help you fall asleep, and wake you up with the happiness function.

PUBLICATION

Ran Zhou and Harpreet Sareen. 2020. EmotiTactor: Emotional Expression of Robotic Physical Contact. In Companion Publication of the 2020 ACM Designing Interactive Systems Conference (DIS ’20).

Ran Zhou, Yanzhe Wu and Harpreet Sareen. 2020. HexTouch: A Wearable Haptic Robot for Complementary Interactions to Companion Agents in Virtual Reality. In SIGGRAPH Asia 2020 Emerging Technologies (SA ’20).

Ran Zhou, Yanzhe Wu and Harpreet Sareen. 2020. HexTouch: Affective Robot Touch for Complementary Interactions to Companion Agents in Virtual Reality. In 26th ACM Symposium on Virtual Reality Software and Technology (VRST ’20). Best Demo Award.

Selected References

[1] Matthew J. Hertenstein, Dacher Keltner, Betsy App, Brittany A. Bulleit, and Ariane R. Jaskolka. 2006. Touch communicates distinct emotions. Emotion 6, 3 (2006), 528–533. DOI:http://dx.doi.org/10.1037/1528-3542.6.3.528

[2] Amol Deshmukh, Bart Craenen, Alessandro Vinciarelli, and Mary Ellen Foster. 2018. Shaping Robot Gestures to Shape Users’ Perception: The Effect of Amplitude and Speed on Godspeed Ratings. In Proceedings of the 6th International Conference on Human-Agent Interaction (HAI ’18). Association for Computing Machinery, New York, NY, USA, 293–300. DOI:https://doi.org/10.1145/3284432.3284445

[3] Mark G. Frank and Janine Stennett. 2001. The forced-choice paradigm and the perception of facial expressions of emotion. Journal of Personality and Social Psychology 80, 1 (2001), 75–85. DOI:http://dx.doi.org/10.1037/0022-3514.80.1.75